Taming "Lazy" ChatGPT: Strategies for Comprehensive and Reliable Outputs

You're a developer or technical professional who relies on AI language models like GPT-4 to assist with coding tasks, research, and analysis. But lately, you've been noticing a frustrating trend - the AI seems to be getting lazier and less reliable. It provides truncated code snippets with placeholders instead of complete implementations. It omits crucial details or forgets entire sections of examples you provide. It confuses functions and parameters, invents non-existent properties, and makes silly mistakes that force you to spend more time manually fixing its output than if you'd just done it yourself from the start.

You've tried everything - tweaking the temperature and top-p sampling settings, adding verbose prompts with explicit instructions, even lecturing the AI sternly. But no matter what you do, it keeps dropping the ball, leaving you with half-baked, inconsistent responses that require heavy reworking. The whole point of leveraging AI was to save time and reduce your workload, but now you're spending more effort babysitting and course-correcting than you are getting productive assistance.

The inconsistency is maddening - one moment the AI will blow you away with a brilliant, thorough implementation. The next, it will choke on a seemingly simpler task, providing an incomplete, low-effort output filled with head-scratching omissions and errors. You've started questioning whether the latest AI models are actually a step backwards in terms of coding reliability.

What if I told you that the root cause of the AI's laziness often lies not with the model itself, but with the prompts and context you're providing? Just like human communication, clear, specific, and well-framed prompts are essential for getting comprehensive, high-quality results from AI assistants. By mastering prompt engineering techniques and understanding the key factors that influence AI outputs, you can overcome the laziness and inconsistency - transforming the AI into a tireless, reliable, and invaluable coding partner.

1. Provide Exhaustive Context and Examples

AI models excel when given rich, detailed context that leaves no ambiguity about the requirements. Provide the model with:

Thorough explanations of the task, use case, and desired output

Multiple worked examples demonstrating the level of comprehensiveness expected

Explicit instructions on aspects to include (e.g. error handling, validation logic)

Supporting files, documentation, or knowledge bases it should reference

The more context you give an AI upfront, the less likely it is to make lazy assumptions or omissions.

Example Prompt

I need you to generate a complete implementation of a web scraper in Python that extracts job listings from https://www.indeed.com. The scraper should:

1. Accept search parameters like job title, location, etc.

2. Navigate to the Indeed search results page for those parameters

3. Extract the job title, company, location, salary (if listed), and link for each result

4. Save the extracted data to a CSV file

I've included an example of the desired CSV output format:

Job Title,Company,Location,Salary,URL

Software Engineer,ACME Corp,"New York, NY","$120,000",https://www.indeed.com/job?id=1234

Senior Developer,Globex,"San Francisco, CA",,https://www.indeed.com/job?id=5678

The scraper must implement robust error handling, including:

- Checking for and handling potential blocking from Indeed

- Retrying failed requests with exponential backoff

- Logging all errors to a file for debugging

Please provide a complete, production-ready implementation following best practices for Python web scraping. Do not use any placeholders or pseudocode - every function, class, and logic block must have its actual implementation included.

This prompt provides:

A clear explanation of the task (building a web scraper for Indeed job listings)

The specific requirements and expected functionality

An example of the desired output format

Instructions on aspects to include (error handling, retries, logging, best practices)

By giving the model this level of context upfront, it has everything it needs to generate a comprehensive, production-ready implementation without making lazy assumptions or omissions.
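
If you write prompts like this often, it can help to assemble them from their parts so that no section gets forgotten. The following is a minimal sketch, not a prescribed method: `build_prompt` and its parameter names are hypothetical, and the section wording is only one way to lay out the context described above.

```python
def build_prompt(task, requirements, output_example, must_include):
    """Assemble a context-rich prompt from its parts (all names are illustrative)."""
    lines = [task.strip(), "", "Requirements:"]
    # Number the requirements so the model can be held to each one.
    lines += [f"{i}. {req}" for i, req in enumerate(requirements, start=1)]
    lines += ["", "Example of the desired output:", output_example.strip()]
    lines += ["", "The implementation must include:"]
    lines += [f"- {item}" for item in must_include]
    lines += ["", "Do not use placeholders or pseudocode."]
    return "\n".join(lines)


prompt = build_prompt(
    task="Generate a complete Python web scraper for job listings.",
    requirements=["Accept search parameters", "Save results to a CSV file"],
    output_example="Job Title,Company,Location,Salary,URL",
    must_include=["Retries with exponential backoff", "Error logging to a file"],
)
print(prompt)
```

Keeping the task, requirements, output example, and must-have list as separate arguments makes it obvious when one of them is missing before the prompt is ever sent.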

Common Pitfall

A common mistake is providing too little context and letting the AI make assumptions, like:

Build a web scraper to extract job listings from Indeed.com

This open-ended prompt without any specifics will likely lead to an incomplete, barebones implementation that falls short of your actual requirements.

Pro Tip

For even more context, you can include examples of the expected code quality and structure. Add a short sample implementation demonstrating your standards for aspects like:

Docstrings and code comments

Variable/function naming conventions

Separation of concerns and code organization

Use of OOP patterns or functional programming styles

The more examples you provide upfront, the better the model can understand and match your expectations for the final output.

2. Use Directive Prompting

The phrasing and framing of your prompts significantly impacts the AI's behavior. Use directive language that leaves no room for interpretation:

"You must provide a complete, end-to-end implementation without any placeholders."

"Do not skip or omit any part of the requirements."

"Include comprehensive error handling, validation logic, and best practices."

"Your output should be ready to deploy to production without any modifications."

You can also try negative prompting by explicitly calling out what the AI should avoid:

"Do NOT use ellipses, comments, or pseudocode as placeholders."

"Do NOT truncate or provide partial implementations."

"Do NOT omit any functions, classes, or logic specified in the requirements."
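
Directives are easier to enforce when you can check the response automatically. Here is a rough sketch of a placeholder detector for generated code. The patterns in `PLACEHOLDER_PATTERNS` are assumptions about what a truncated answer tends to look like, not an exhaustive or official list, and `find_placeholders` is a hypothetical helper name.

```python
import re

# Hypothetical patterns that often signal an elided implementation.
PLACEHOLDER_PATTERNS = [
    r"^\s*(#|//)\s*\.\.\.",   # a comment that starts with an ellipsis
    r"^\s*\.\.\.\s*$",        # a line that is only an ellipsis
    r"(#|//).*\b(rest of|remaining|your code here|implementation here)\b",
    r"\bTODO\b",
]


def find_placeholders(output):
    """Return (line_number, line) pairs that look like placeholders."""
    hits = []
    for number, line in enumerate(output.splitlines(), start=1):
        if any(re.search(p, line, flags=re.IGNORECASE) for p in PLACEHOLDER_PATTERNS):
            hits.append((number, line.strip()))
    return hits


sample = "def scrape():\n    # ... rest of the code\n    return []\n"
print(find_placeholders(sample))
```

An empty result does not prove the code is complete, but a non-empty one is a cheap signal that the response should be rejected and regenerated.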

Example Prompt

You are an expert language translator tasked with translating a JSON file from English to Spanish.

I have provided the original JSON file for you to translate. Your job is to output a NEW JSON file with an identical structure, but where ALL text fields have been translated to Spanish.

You MUST NOT use ellipses (...) or any other placeholders to truncate or omit portions of the translation. Every single text field in the JSON MUST be fully translated to Spanish in your output, no exceptions.

I will be using your translated JSON output for production purposes, so it MUST be complete and accurate. Do NOT provide a partial or low-effort translation under any circumstances.

This prompt uses very directive language emphasizing that the AI must not use ellipses or placeholders, and must fully translate every single text field to Spanish. It specifies requirements around proper formatting, structure preservation, and high translation quality.

The emphatic phrasing "MUST NOT", "no exceptions", "Do NOT provide partial", etc. leaves no room for lazy interpretation - a complete, high-quality translation is the only acceptable output.
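
Since the prompt demands an identical structure, you can verify that claim instead of trusting it. The sketch below compares the original and translated JSON and reports where their shapes differ. `structure_diffs` is a hypothetical helper, and the sample product data is made up for illustration.

```python
import json


def structure_diffs(original, translated, path="$"):
    """Return a list of paths where the two JSON values differ in shape."""
    if isinstance(original, dict) and isinstance(translated, dict):
        diffs = []
        for key in sorted(original.keys() | translated.keys()):
            if key not in original or key not in translated:
                diffs.append(f"{path}.{key}: key missing on one side")
            else:
                diffs += structure_diffs(original[key], translated[key], f"{path}.{key}")
        return diffs
    if isinstance(original, list) and isinstance(translated, list):
        if len(original) != len(translated):
            return [f"{path}: list length {len(original)} != {len(translated)}"]
        diffs = []
        for i, (a, b) in enumerate(zip(original, translated)):
            diffs += structure_diffs(a, b, f"{path}[{i}]")
        return diffs
    if type(original) is not type(translated):
        return [f"{path}: type changed"]
    # A string that is only an ellipsis means the field was skipped.
    if isinstance(translated, str) and translated.strip() in ("...", "…"):
        return [f"{path}: placeholder instead of text"]
    return []


source = json.loads('{"title": "Red shoes", "tags": ["new", "sale"]}')
truncated = json.loads('{"title": "Zapatos rojos", "tags": ["nuevo"]}')
print(structure_diffs(source, truncated))
```

This only checks shape and obvious placeholders. It says nothing about translation quality, which still needs a human or a second model to review.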

Common Pitfall

A common pitfall is giving the AI an open-ended, ambiguous prompt like:

Here is a JSON file with product listings in English. Can you translate this to Spanish for me?

With such a vague prompt, the AI is likely to provide a lazy, truncated translation using ellipses for larger text fields, under the assumption that you don't need a comprehensive translation of the entire file.

Pro Tip

Emphasis of any kind can be very useful for highlighting important context. Beyond just directive language, you can:

Use UPPERCASE for critical requirements

Bold or italicize key points like **this** or *this*

Add emphatic repetition like "You MUST, MUST, MUST..."

Use typographic markers like ❗️ or ⚠️

Anything that makes part of the prompt stand out more is likely to increase the AI's focus and attention on those aspects when generating its output.

For example:

You MUST ❗️❗️❗️ implement comprehensive error handling and input validation. Do NOT skip this under ANY circumstances! Your code is expected to be **production-ready** with **zero** missing functionality.

The combination of uppercase, bold emphasis, and tripled typographic markers signals to the AI that this requirement is non-negotiable.

Example

Let's see how we can get GPT-4 to print out an entire JSON file when it was previously truncating the results:

3. Use N-Shot Prompting with Output Examples

Another powerful technique is to provide the AI with multiple examples of the desired output upfront, a method known as "n-shot prompting." This demonstrates the exact level of detail and comprehensiveness you expect, guiding the AI to match that standard.

For example, if you need the AI to generate code following specific conventions, you can prefix or postfix your prompt with 2-3 examples showing your preferred code formatting, documentation, and organizational structure.

The AI will then learn from those examples and apply the same patterns to its own generated output.
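
One common way to supply those examples is as prior conversation turns, so the model sees each example input paired with the output you want. The sketch below builds such a message list. It assumes the role/content chat message format used by chat-style APIs; `build_few_shot_messages` and the sample snippets are hypothetical.

```python
def build_few_shot_messages(system, examples, request):
    """Turn (input, ideal_output) pairs into alternating user/assistant turns."""
    messages = [{"role": "system", "content": system}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real request goes last, after the model has seen the pattern.
    messages.append({"role": "user", "content": request})
    return messages


examples = [
    ("Write a function that doubles a number.",
     'def double(n: int) -> int:\n    """Return n multiplied by two."""\n    return n * 2'),
    ("Write a function that negates a number.",
     'def negate(n: int) -> int:\n    """Return the additive inverse of n."""\n    return -n'),
]
messages = build_few_shot_messages(
    system="You write complete, documented Python functions.",
    examples=examples,
    request="Write a function that squares a number.",
)
print(len(messages))
```

Two or three examples that share the same conventions (type hints and docstrings here) are usually enough to make the pattern unambiguous.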

4. Adjust Temperature and Top-P

The temperature and top-p sampling parameters control the randomness and creativity of the AI's outputs. If you're getting lazy or inconsistent results, adjusting these parameters can help:

Temperature: Controls randomness. It ranges from 0 to 2 and defaults to 1. Higher values (e.g. 0.9) make the output more random and creative; lower values (e.g. 0.2) make it more focused and predictable.

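Top-P: Controls how much of the probability distribution the model may sample from, and defaults to 1. The model considers only the most likely tokens whose combined probability reaches the top-p value. Higher values allow more unpredictable token choices; lower values (e.g. 0.5) restrict sampling to safer, more common tokens.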

For coding tasks where you want comprehensive and consistent outputs, you generally want:

Low temperature (0 - 0.5)

Low to moderate top-p (0 - 0.6)

This reduces randomness/creativity in favor of more predictable, reliable outputs based on the patterns in the training data.
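
To make the two parameters concrete, here is a small pure-Python sketch of what they do to a next-token distribution. It is a simplified illustration of temperature scaling and nucleus (top-p) filtering, not the code any provider actually runs, and the three logits are made-up values.

```python
import math


def apply_temperature(logits, temperature):
    """Softmax over logits divided by the temperature (temperature > 0)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalize over the kept tokens."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


logits = [2.0, 1.0, 0.0]  # hypothetical scores for three candidate tokens
default = apply_temperature(logits, 1.0)
focused = apply_temperature(logits, 0.2)
print(default, focused)
print(top_p_filter(default, 0.5), top_p_filter(default, 0.8))
```

Lowering the temperature concentrates probability on the top token, while lowering top-p removes the unlikely tokens from consideration entirely. Both push the model toward its most probable continuation.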

5. Specify Output Constraints

Another way to push the AI toward more comprehensive outputs is by setting clear quantitative constraints, such as:

"Your list must include at least 50 Twitter accounts."

"Do not provide an output shorter than 2,000 words."

"Include at least 5 examples demonstrating different use cases."

Example Prompt

Let's contrast a vague prompt:

Give me a list of twitter accounts to follow on Indie Hacking

With a more constrained prompt:

Give me a list of twitter accounts to follow on Indie Hacking. There should be at least 50 accounts and give me a brief description of at least 140 characters on why I should follow each account.

The vague prompt is likely to result in a lazy, short list of accounts without much detail. The constrained prompt, by contrast, sets clear quantitative targets the AI must aim for: at least 50 accounts, each with a description of 140 or more characters.
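
Quantitative constraints have a useful side effect: they can be checked mechanically. A minimal sketch, assuming you have already parsed the response into (handle, description) pairs; `check_account_list` and the sample handles are hypothetical.

```python
def check_account_list(items, min_items=50, min_description_chars=140):
    """Return a list of constraint violations; an empty list means all pass."""
    problems = []
    if len(items) < min_items:
        problems.append(f"expected at least {min_items} accounts, got {len(items)}")
    for handle, description in items:
        if len(description) < min_description_chars:
            problems.append(f"{handle}: description has {len(description)} characters")
    return problems


parsed = [
    ("@example_one", "x" * 140),   # placeholder text of exactly 140 characters
    ("@example_two", "too short"),
]
print(check_account_list(parsed, min_items=2))
```

The returned messages can be pasted straight into a follow-up prompt asking the model to fix exactly the items that fell short.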

6. Leverage Multiple Models for Complex Tasks

For complex, multi-step tasks that require different strengths, you can leverage the unique capabilities of multiple AI models in a consecutive prompting workflow:

Ask GPT-4 to outline and summarize the key steps and high-level components

Feed that summary to GPT-3.5 or another model better suited for detailed implementations, and have it generate the initial code/output

Pass that implementation back to GPT-4 for review, refinement, and finalization

This "divide-and-conquer" approach takes advantage of the distinct proficiencies of different models. GPT-4 excels at high-level reasoning, planning, and task decomposition. In contrast, GPT-3.5 has a propensity for more comprehensive, verbose outputs once given proper scaffolding.

By using GPT-4 to first distill the requirements into a clear summary, you provide the right framing for GPT-3.5 to then construct a detailed, initial implementation. GPT-4 can then review that output through its reasoning lens to refine and finalize the implementation.
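
The workflow above can be sketched as a three-stage pipeline. To keep the example independent of any particular SDK, each model is represented by a plain function that takes a prompt and returns text. That interface, the `plan_implement_review` name, and the prompt wording are all assumptions made for illustration.

```python
from typing import Callable

Model = Callable[[str], str]  # hypothetical interface: prompt in, text out


def plan_implement_review(task: str, planner: Model, implementer: Model, reviewer: Model):
    """Chain three model calls: outline, then draft, then review."""
    outline = planner(f"Outline the key steps and components for this task:\n{task}")
    draft = implementer(
        f"Write a complete implementation that follows this outline:\n{outline}"
    )
    final = reviewer(
        "Review and refine this implementation for the task below.\n\n"
        f"Task:\n{task}\n\nImplementation:\n{draft}"
    )
    return {"outline": outline, "draft": draft, "final": final}


# Stub models stand in for real API calls so the flow can be run offline.
result = plan_implement_review(
    task="Build a CSV exporter",
    planner=lambda prompt: "1. Read rows 2. Write file",
    implementer=lambda prompt: "def export(rows): ...",
    reviewer=lambda prompt: "def export(rows): return len(rows)",
)
print(result["final"])
```

In practice you would replace each stub with a wrapper around the model of your choice, and the planner and reviewer can be the same model.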

Reasoning

Leverage Specialized Skills: Different AI models have specialized skills based on their training data and architectures. GPT-4 is optimized for general task reasoning and multi-modal inputs. GPT-3.5 is a highly capable language model adept at generating long-form content.

By chaining their outputs, you combine their respective strengths - GPT-4's high-level planning combined with GPT-3.5's propensity for detail and comprehensiveness.

Cost Optimization (For Chat Completion API Users): Inference on GPT-4 is more expensive than GPT-3.5. By judiciously leveraging GPT-4 only for the planning/refinement stages, you can optimize costs while still benefiting from its high-level reasoning abilities.

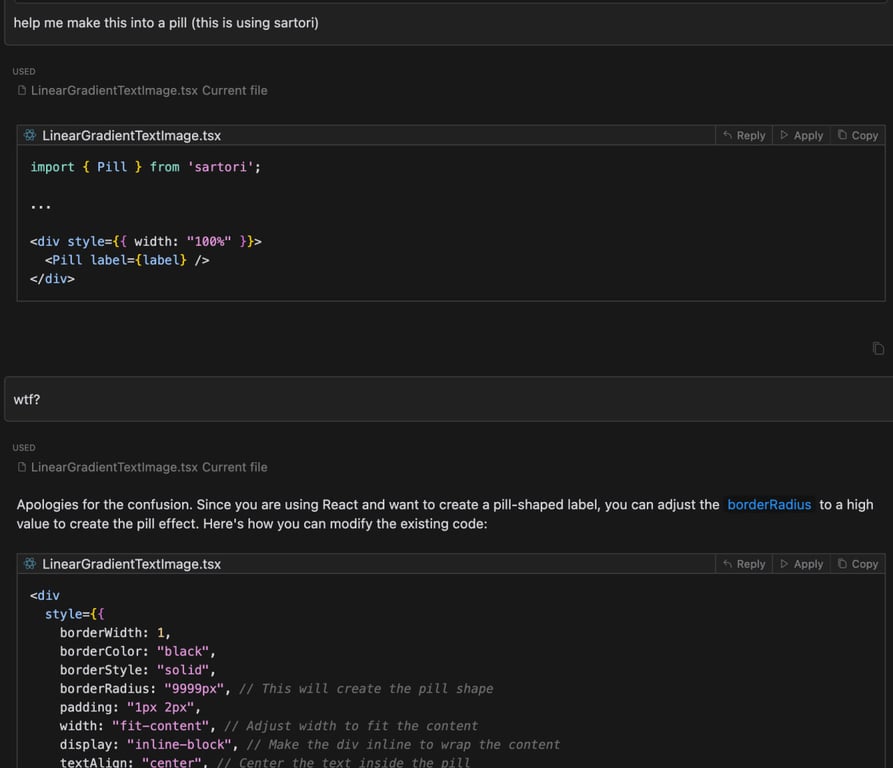

7. Incorporate "Punishment" Feedback Loops

If an AI assistant continues providing lazy, low-effort, or nonsensical responses despite your best prompting efforts, you may need to implement "punishment" feedback loops.

The idea is to firmly push back and express dissatisfaction when the AI's output is subpar or ridiculous. This signals to the AI that its response was unacceptable and prompts it to try again from scratch, hopefully with more care and effort.

Suppose the AI answers with code that calls a function that does not exist. Without a pushback signal, it may continue down the wrong path, providing more examples that use the non-existent function under the assumption that its initial response was sufficient.
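
If you are calling a model through an API, the same pushback can be automated: check each reply, and if it fails, append the rejection to the conversation and ask again. The sketch below assumes a hypothetical `model` callable that takes a list of role/content messages and returns text, plus a checker of your own. The pushback wording is only an example.

```python
def generate_with_pushback(model, prompt, find_problems, max_attempts=3):
    """Retry until find_problems(reply) is empty or attempts run out.

    Returns the last reply and the number of attempts used.
    """
    messages = [{"role": "user", "content": prompt}]
    reply = ""
    for attempt in range(1, max_attempts + 1):
        reply = model(messages)
        problems = find_problems(reply)
        if not problems:
            return reply, attempt
        # Keep the rejected reply in context and state what was wrong.
        messages.append({"role": "assistant", "content": reply})
        messages.append({
            "role": "user",
            "content": "That output is incomplete. Fix these problems and try again:\n"
            + "\n".join(f"- {p}" for p in problems),
        })
    return reply, max_attempts


# Stub model that fails once, then succeeds, so the loop can be run offline.
canned = iter(["# ... rest of code", "def f():\n    return 1"])
reply, attempts = generate_with_pushback(
    model=lambda messages: next(canned),
    prompt="Write f().",
    find_problems=lambda text: ["contains a placeholder"] if "..." in text else [],
)
print(attempts)
```

Naming the specific problem in the pushback message tends to be more useful than harshness alone, because it tells the model what to change on the next attempt.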

Reasoning

While typically not recommended for general conversation, terse "punishment" phrases can be effective for quickly correcting an AI when it has gone off the rails. The harshness breaks the AI's pattern of continuing with low-effort responses. Examples include:

"That's ridiculous, try again."

"Nope, that output is lazy/incomplete."

"This is not what I asked for at all."

"WTF?"

"Fxxx Off!"

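Within a conversation, consistently rejecting poor outputs while acknowledging high-quality ones can steer the AI toward the behavior you want, much like an operant conditioning feedback loop. The model's weights are not updated by your feedback, so the effect lasts only as long as those exchanges remain in the conversation context.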

Caveats

This punishment approach should be used judiciously and is not a substitute for proper prompting. Overly harsh language may cause unintended side effects like altering the output language and tone of voice of the response.

Clear prompting should always be the first line of defense against lazy outputs.

Tools Matter

While implementing the prompting best practices outlined above can go a long way in overcoming lazy AI outputs, managing multiple prompts, personas, and language models across different tools and workflows can quickly become cumbersome.

This is where AI platforms like Wielded can help streamline your prompting process. Wielded allows you to:

Create Custom Prompts and Personas: Save and reuse your finely-tuned prompts as custom instructions or system prompts. The Persona feature lets you quickly switch between different profiles with unique preferences, formatting conventions, and prompt templates.

Leverage Multiple Language Models: Don't be locked into a single AI like GPT-4. Many users have reported canceling their ChatGPT Plus subscriptions in favor of Anthropic's Claude due to inconsistencies. With Wielded, you can easily connect and utilize a mix of language models like Claude, GPT-3.5, GPT-4, and Google's PaLM/Gemini - picking the best model for each task.

Streamline Prompting Workflows: Implement multi-model prompting workflows, chaining outputs from different AIs using Wielded's built-in tools. Manage feedback loops, versioning, and iteration history to continuously refine your prompts over time.

By centralizing your prompting process with tools like Wielded, you can ensure comprehensive, high-quality AI outputs every time - without the hassle of juggling multiple interfaces and disconnected prompts.

If you're ready to take your AI prompting game to the next level, give Wielded a try.

Unleash the AI's Full Potential

At the end of the day, the latest AI language models are incredibly powerful tools that can supercharge your productivity and capabilities - when properly prompted and managed. By mastering prompting best practices like providing comprehensive context, using directive language, leveraging output examples, adjusting sampling parameters, and implementing feedback loops, you can overcome inconsistent or lazy outputs.

Tools like Wielded can further streamline the process, allowing you to centralize prompts, leverage multiple language models, and implement advanced prompting workflows. With the right prompting techniques and tools in your arsenal, you can unlock the true potential of AI assistants as tireless, reliable coding partners.

So go forth, prompt with precision, and tame the AI to be the invaluable assistant you need it to be. The path to unlocking their full capabilities lies in your prompting mastery.
